Task-Centric Gesture Analysis

Authors

Zihao Zhan

Institute

OCH Gesture Analysis

Summary

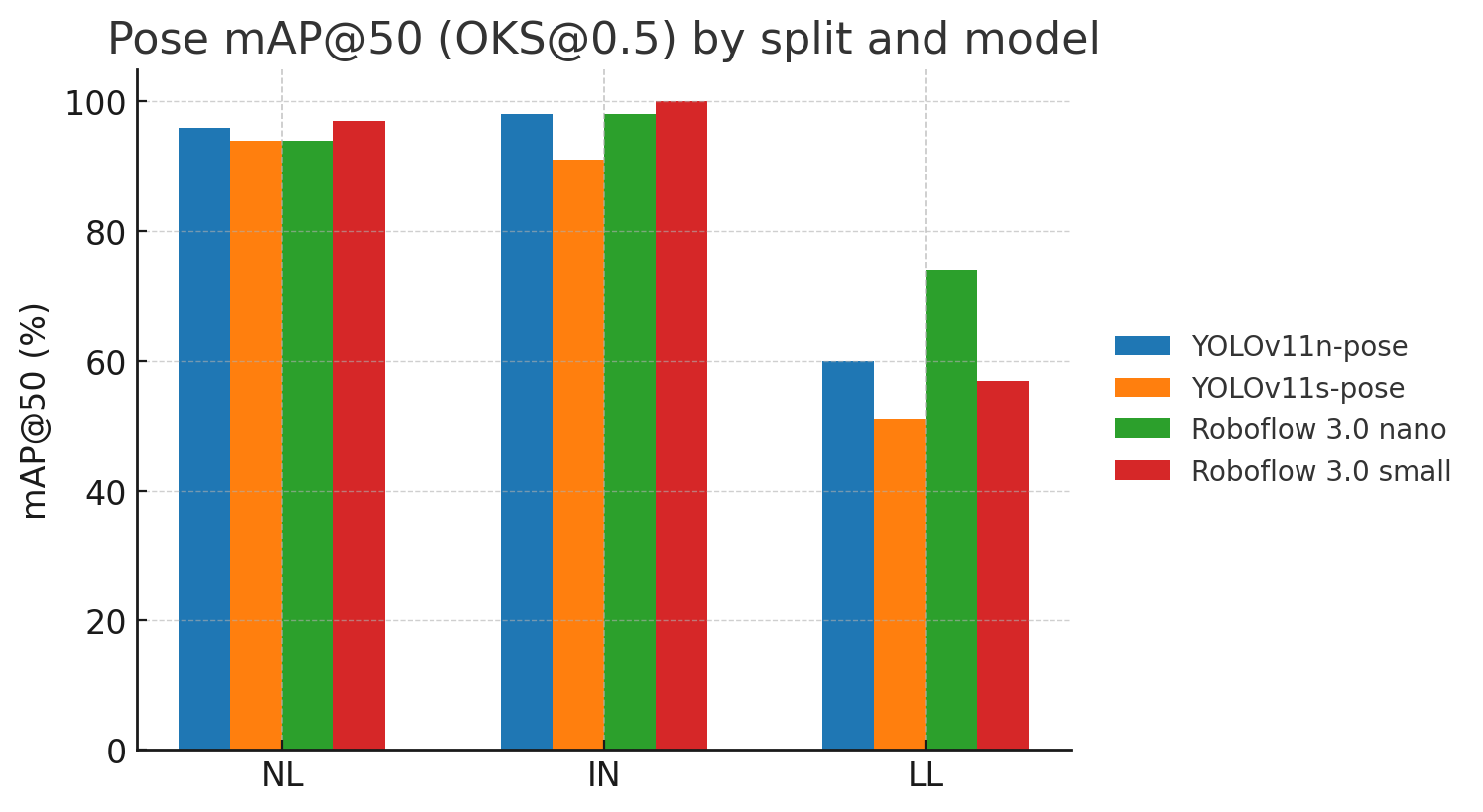

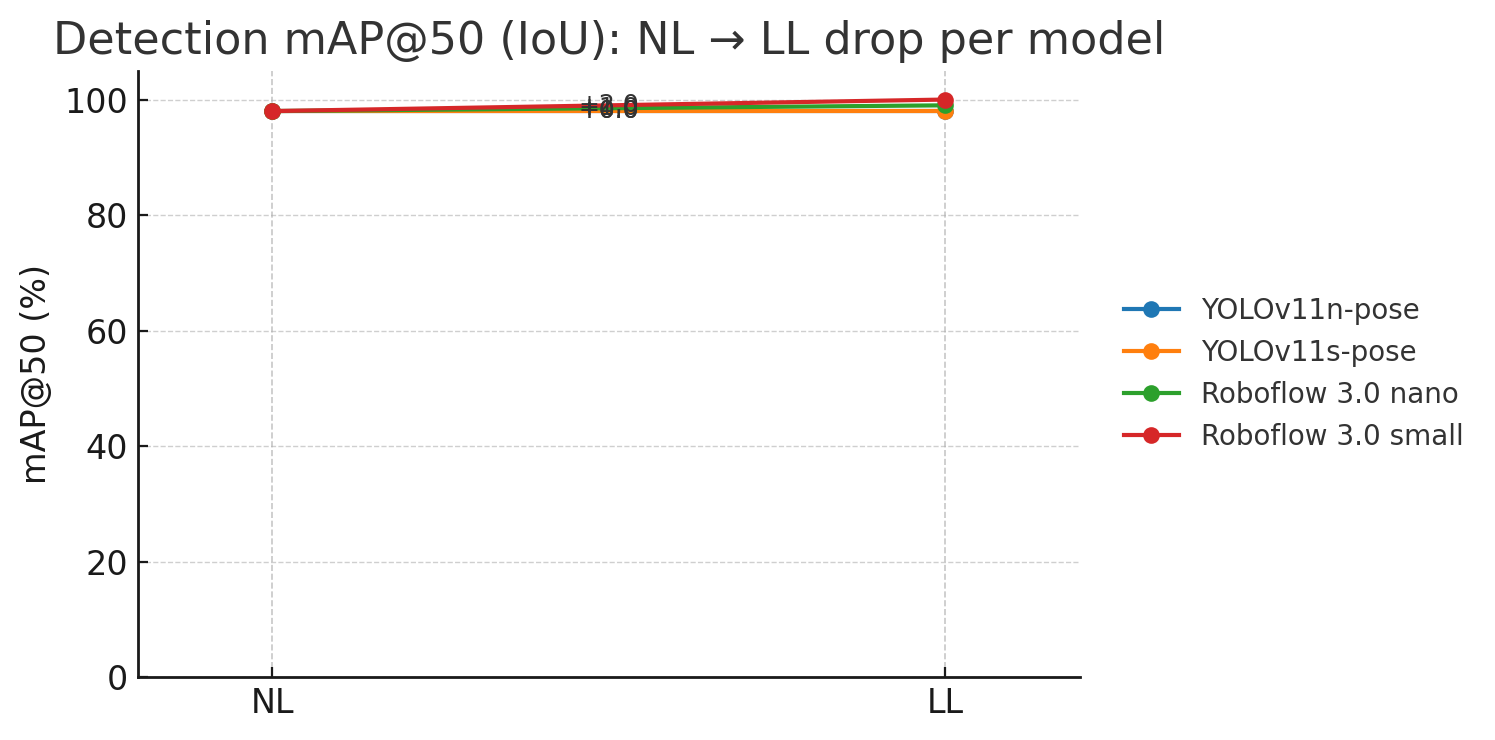

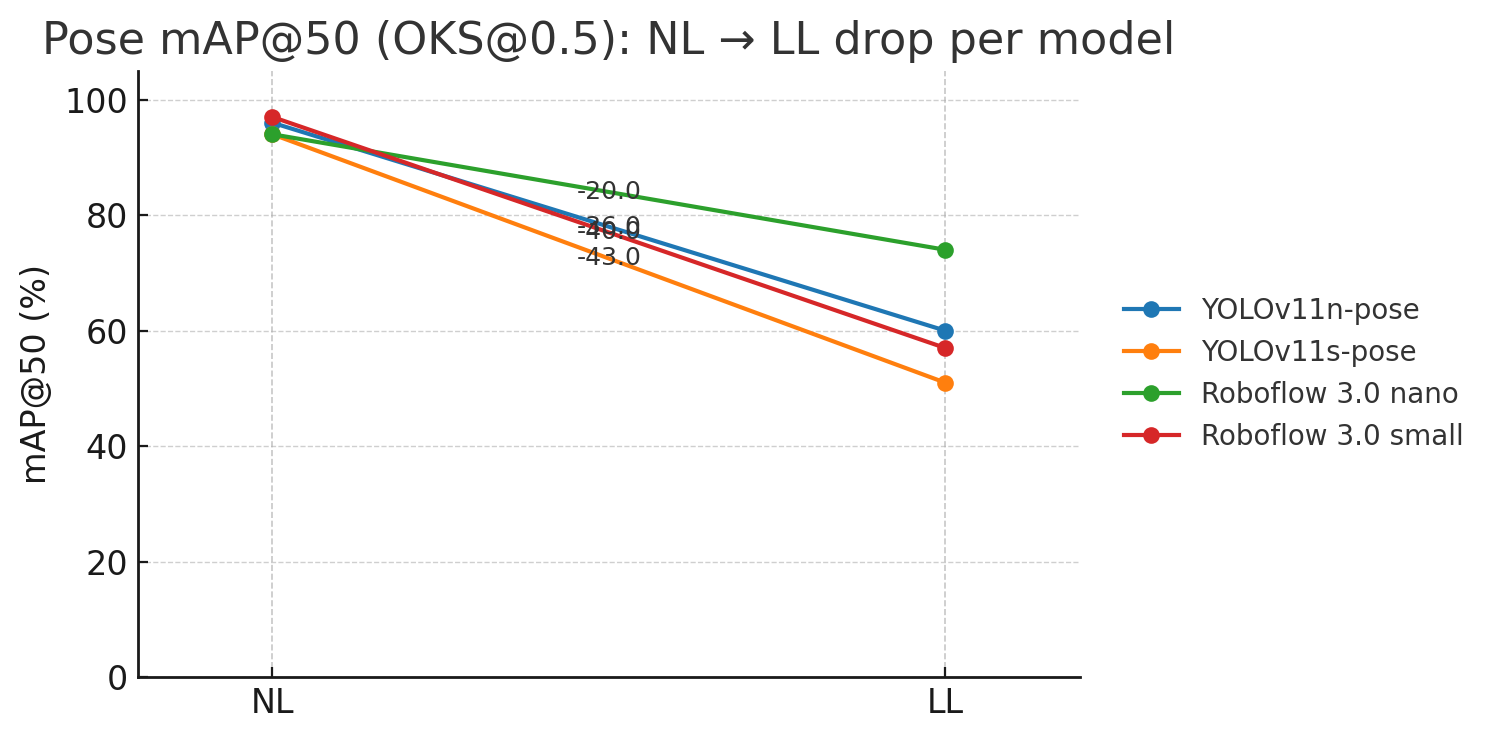

We study robustness in gesture-based HCI from a task–environment perspective. Using two geometrically contrasted gestures (OpenPalm, CloseFist), we train on Normal Light (NL) and evaluate on three test splits: NL, Interference (IN), and Low-Light (LL). We benchmark four single-stage detector–pose models (YOLOv11 n/s pose; Roboflow 3.0 nano/small) using IoU-based box mAP@50 and OKS-based pose mAP@50, and report the performance retained on each split relative to NL.
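The two matching criteria behind these metrics can be sketched as follows. This is a minimal illustration, not the evaluation code used in the study: it assumes boxes in `(x1, y1, x2, y2)` form and a COCO-style OKS definition, and the function names and the per-keypoint `sigmas` values are hypothetical. At the @50 threshold, a prediction counts as a match when its IoU (boxes) or OKS (keypoints) is at least 0.5.

```python
import math


def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def oks(pred, gt, visible, area, sigmas):
    """COCO-style object keypoint similarity.

    pred, gt : lists of (x, y) keypoints
    visible  : list of flags; only keypoints with flag > 0 are scored
    area     : ground-truth object area (scale squared)
    sigmas   : per-keypoint falloff constants (assumed values, not from the source)
    """
    total, count = 0.0, 0
    for (px, py), (gx, gy), v, sigma in zip(pred, gt, visible, sigmas):
        if v <= 0:
            continue
        d2 = (px - gx) ** 2 + (py - gy) ** 2
        k2 = (2.0 * sigma) ** 2
        total += math.exp(-d2 / (2.0 * area * k2 + 1e-12))
        count += 1
    return total / count if count else 0.0
```

Because OKS averages a per-keypoint Gaussian of the localization error, small errors on individual keypoints lower the score even when the enclosing box still overlaps the ground truth well, which is why the two metrics can diverge on the same image.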

- Detection remains near-ceiling across NL/IN/LL.

- Pose is strong in NL/IN but collapses in LL, where information loss and a low signal-to-noise ratio (SNR) degrade keypoint localization.

- Surface interference behaves like additive texture (minimal impact), while LL erases fine, visible cues required by keypoints.
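One way to read "retained performance relative to NL" is as the ratio of each split's score to the NL score for the same model. The sketch below assumes that ratio definition; the helper name `retained_performance` and the numbers in `example_pose_map50` are hypothetical placeholders, not results from this study.

```python
def retained_performance(scores, baseline="NL"):
    """Return each split's score divided by the baseline split's score."""
    base = scores[baseline]
    if base <= 0:
        raise ValueError("baseline score must be positive")
    return {split: value / base for split, value in scores.items()}


# Hypothetical placeholder values for illustration only (not measured results).
example_pose_map50 = {"NL": 0.90, "IN": 0.81, "LL": 0.45}

for split, ratio in retained_performance(example_pose_map50).items():
    print(f"{split}: {ratio:.0%} of NL performance retained")
```

Reporting a ratio rather than a raw difference makes models with different NL baselines comparable: a value near 1 indicates the condition has little effect, while a value well below 1 indicates a collapse of the kind described for pose under LL.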

Resources

Figures